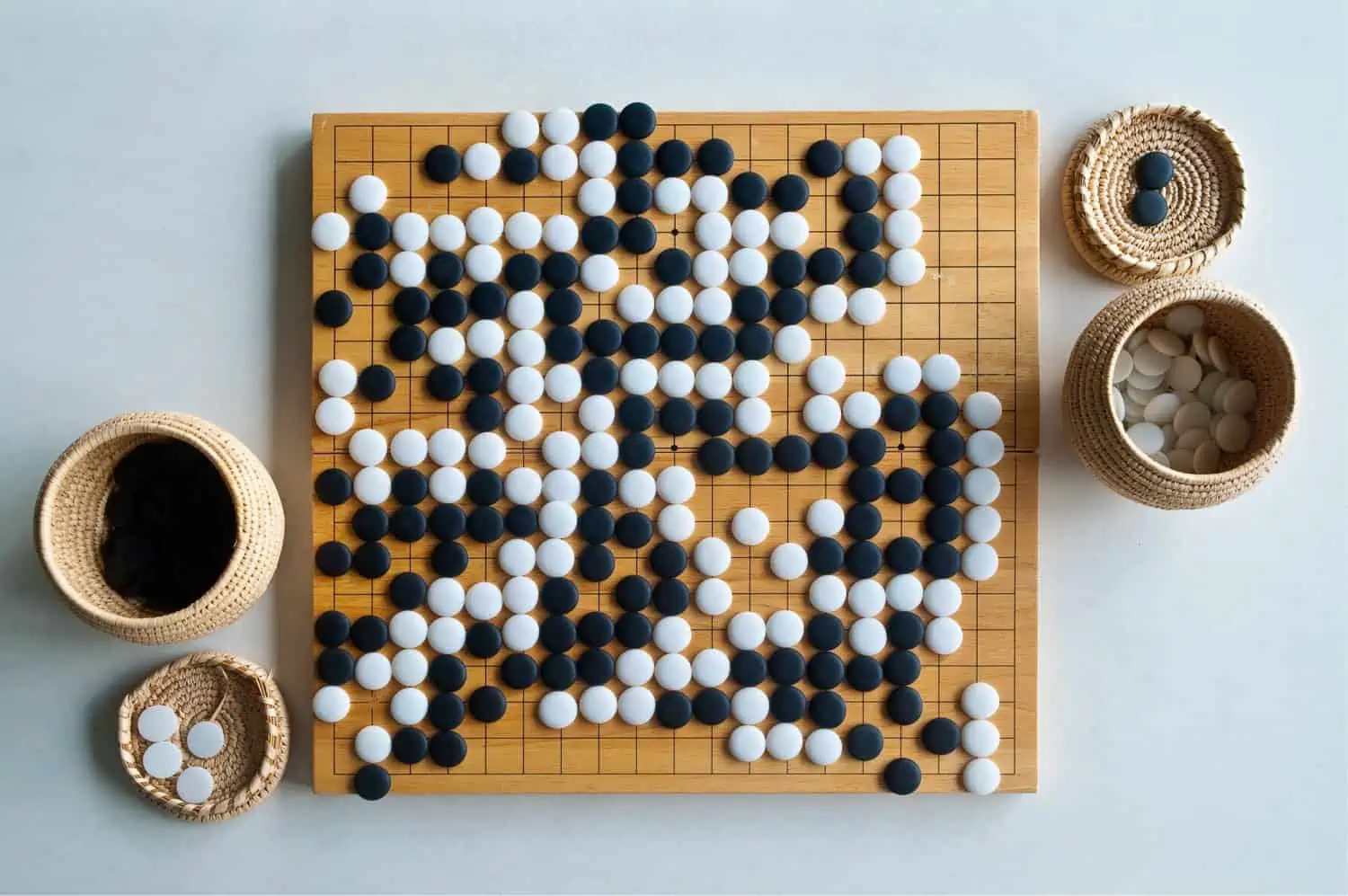

The Birth of AlphaGo – AI Beats a Human at Go!

Something incredible happened in March 2016. DeepMind’s AlphaGo, an AI built to play the ancient board game Go, defeated Lee Sedol, a 9-dan professional player and one of the greatest Go players in the world.

If you’ve never heard of Go before, it’s an ancient strategy game that’s far more complex than chess. While the number of possible chess games is often estimated at around 10^120 (the Shannon number), Go has a mind-blowing 10^170 or so legal board positions alone.

That’s more than the number of atoms in the observable universe. 😮
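To get a feel for where a number like that comes from, here is a quick back-of-the-envelope check. It is only a rough sketch: each of the 361 points on a 19x19 board is treated as empty, black, or white, which gives an upper bound that also counts illegal positions (the legal count is a bit smaller, at roughly 2 x 10^170). The 10^80 figure for atoms is the commonly quoted estimate, and the variable names are my own.

```python
# Rough upper bound on Go board configurations (includes illegal positions).
BOARD_POINTS = 19 * 19             # 361 intersections on a standard board
upper_bound = 3 ** BOARD_POINTS    # each point is empty, black, or white

ATOMS_IN_UNIVERSE = 10 ** 80       # commonly quoted order-of-magnitude estimate

digits = len(str(upper_bound))
print(f"3^361 has {digits} digits")            # 3^361 has 173 digits
print(upper_bound > ATOMS_IN_UNIVERSE)         # True
```

Python integers have arbitrary precision, so the exact value of 3^361 can be computed directly instead of approximated with floats.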

For years, many researchers believed AI wouldn’t master Go for decades because traditional algorithms couldn’t handle its sheer complexity. Then along came AlphaGo, and everything changed. Let me tell you how this magical AI works and why it’s such a big deal.

Image: a dramatic, symbolic depiction of the match between AlphaGo, an AI program, and a legendary human Go player.

Table of Contents

- How AlphaGo Works: The Secret Sauce

- From Supervised Learning to Self-Play – The Reinforcement Learning Magic

- The Historic Match: AlphaGo vs Lee Sedol

- Why AlphaGo is a Big Deal

- What I Learned

- Final Thoughts

1. How AlphaGo Works: The Secret Sauce

AlphaGo combined two of the coolest ideas in AI:

- Deep Neural Networks (to learn patterns and positions like a human).

- Monte Carlo Tree Search (MCTS) (to make decisions like an efficient search engine).

1.1 Deep Neural Networks – Learning Patterns

At its core, AlphaGo uses two neural networks:

The Policy Network

- This network decides where to play next on the board.

- It was trained using supervised learning: AlphaGo studied about 30 million positions from roughly 160,000 games played by strong human players to predict their next moves.

- Think of it like AlphaGo learning the "intuition" of a professional player.
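The last step of a policy network can be sketched in a few lines: it produces one raw score per board point, and those scores are turned into a probability for each legal move. This is a minimal sketch under my own assumptions, not AlphaGo's code. The scores here are made-up numbers standing in for the output of a real deep network, and `move_probabilities` is a hypothetical helper name.

```python
import math

BOARD_POINTS = 19 * 19  # one score per intersection

def move_probabilities(scores, legal_mask):
    """Softmax over legal points only; illegal points get probability 0."""
    legal_scores = [s for s, ok in zip(scores, legal_mask) if ok]
    top = max(legal_scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) if ok else 0.0
            for s, ok in zip(scores, legal_mask)]
    total = sum(exps)
    return [e / total for e in exps]

# Made-up scores: the "network" likes point 72 the most.
scores = [0.0] * BOARD_POINTS
scores[72] = 2.0
legal_mask = [True] * BOARD_POINTS
legal_mask[0] = False  # pretend point 0 is occupied

probs = move_probabilities(scores, legal_mask)
best_point = max(range(BOARD_POINTS), key=lambda i: probs[i])
print(best_point, round(sum(probs), 6))
```

Masking illegal points before normalizing means the probabilities always add up to 1 across the moves that can actually be played.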

The Value Network

- This network predicts the winner of the game from any given board position.

- Instead of calculating every possible outcome (impossible in Go), the value network estimates which player is winning at any point.

- This lets AlphaGo judge a position without reading every line out to the end of the game.
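The interface of a value function is simple: a board goes in, and a single number between -1 (we are losing) and +1 (we are winning) comes out. The sketch below only illustrates that interface. It assumes a crude stone-count heuristic squashed with `tanh` as a stand-in for the real thing, which is a deep network trained on game outcomes. The function name and the `scale` parameter are my own inventions.

```python
import math

def value_estimate(board, scale=0.1):
    """Toy stand-in for a value network.

    board: list of rows with +1 (our stone), -1 (opponent stone), 0 (empty).
    Returns a score in (-1, 1); positive means we look ahead.
    """
    stone_difference = sum(sum(row) for row in board)
    return math.tanh(scale * stone_difference)

board = [
    [1, 1, 0],
    [0, -1, 0],
    [0, 1, 0],
]
flipped = [[-cell for cell in row] for row in board]  # same board, other side

print(value_estimate(board) > 0)     # True: we have more stones
print(value_estimate(flipped) < 0)   # True: the opponent does
```

Counting stones is a poor way to judge a real Go position. The point is only that the search can ask for one cheap number per position instead of playing everything out.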

The combination of these two networks gave AlphaGo an ability that felt almost human. It learned patterns, shapes, and strategies on the Go board—just like professional players.

1.2 Monte Carlo Tree Search (MCTS) – Calculating the Best Move

Once the neural networks provided their suggestions, AlphaGo used Monte Carlo Tree Search (MCTS) to explore and evaluate moves.

Here’s how MCTS works in simple terms:

- Start from the current board position.

- Explore different moves by simulating "what if" scenarios.

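- For each move, roll out the game quickly until it ends (AlphaGo used a fast, lightweight policy for these rollouts rather than purely random moves).

- Combine the rollout results with the Value Network's estimate to judge which moves are good and narrow down the search.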

Think of MCTS like a decision tree where AlphaGo looks ahead to see the possible outcomes of its next moves but focuses only on promising branches, not every possible move.
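To make the loop concrete, here is a minimal sketch of plain MCTS (the UCT variant with purely random rollouts) on a toy game instead of Go. The game is my own assumption: players alternately take 1 to 3 stones from a pile, and whoever takes the last stone wins. There are no neural networks here, so this is not AlphaGo's search, just the bare select, expand, simulate, backpropagate cycle. All names are hypothetical.

```python
import math
import random

MAX_TAKE = 3  # toy rule: take 1 to 3 stones; taking the last stone wins

def legal_moves(stones):
    return list(range(1, min(MAX_TAKE, stones) + 1))

class Node:
    def __init__(self, stones, parent=None, move=None):
        self.stones = stones            # stones left at this position
        self.parent = parent
        self.move = move                # move that led here from the parent
        self.children = []
        self.untried = legal_moves(stones)
        self.visits = 0
        self.wins = 0.0                 # wins for the player who moved into this node

def uct_child(node, c=1.4):
    """Pick the child with the best win rate plus exploration bonus (UCB1)."""
    def score(ch):
        return ch.wins / ch.visits + c * math.sqrt(math.log(node.visits) / ch.visits)
    return max(node.children, key=score)

def rollout(stones, rng):
    """Play random moves to the end; True if the player to move at the start wins."""
    to_move_wins = False  # with 0 stones left, the player to move has already lost
    turn = 0
    while stones > 0:
        stones -= rng.choice(legal_moves(stones))
        if stones == 0:
            to_move_wins = (turn % 2 == 0)
        turn += 1
    return to_move_wins

def mcts_best_move(stones, iterations=2000, seed=0):
    rng = random.Random(seed)
    root = Node(stones)
    for _ in range(iterations):
        node = root
        # 1. Selection: walk down fully expanded nodes.
        while not node.untried and node.children:
            node = uct_child(node)
        # 2. Expansion: add one new child if possible.
        if node.untried:
            move = node.untried.pop()
            child = Node(node.stones - move, parent=node, move=move)
            node.children.append(child)
            node = child
        # 3. Simulation: the player who moved into `node` wins
        #    exactly when the player to move from `node` loses.
        mover_wins = not rollout(node.stones, rng)
        # 4. Backpropagation: flip the result at each level going up.
        while node is not None:
            node.visits += 1
            node.wins += 1.0 if mover_wins else 0.0
            mover_wins = not mover_wins
            node = node.parent
    # The most visited move is the most trusted one.
    return max(root.children, key=lambda ch: ch.visits).move

print(mcts_best_move(5))  # taking 1 leaves 4 stones, a lost position for the opponent
```

In this toy game, leaving the opponent a multiple of 4 stones is a forced win, so the search should settle on taking 1 from a pile of 5. Most of its visits end up on that branch, which is the "focus only on promising branches" idea in miniature.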

2. From Supervised Learning to Self-Play – The Reinforcement Learning Magic

After learning from expert games, AlphaGo did something even cooler: it started playing against itself!

This is called reinforcement learning. AlphaGo played millions of games against itself and steadily improved. Each game was like a training session where it learned what worked and what didn’t.

Reinforcement learning can be thought of like this:

- AlphaGo tries a move (action).

- If the move leads to a win, it gets a "reward."

- If the move leads to a loss, it learns to avoid it in the future.
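The reward idea above can be sketched with a tiny policy-gradient (REINFORCE-style) update. Everything here is a simplifying assumption of mine. The game is a toy pile game (take 1 to 3 stones, last stone wins) instead of Go, the policy is a small table of preferences instead of a neural network, and the learning rate is arbitrary. It shows the mechanism, not AlphaGo's actual training setup.

```python
import math
import random

def softmax(prefs):
    top = max(prefs)
    exps = [math.exp(p - top) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]

def reinforce_update(prefs, action, reward, lr=0.1):
    """One policy-gradient step on a softmax policy.

    The gradient of log pi(action) w.r.t. the preferences is (one_hot - probs),
    so a positive reward raises the chosen action's preference and a negative
    reward lowers it.
    """
    probs = softmax(prefs)
    return [p + lr * reward * ((1.0 if i == action else 0.0) - probs[i])
            for i, p in enumerate(prefs)]

def self_play_training(start=5, games=5000, seed=0):
    rng = random.Random(seed)
    # One preference list per pile size; index i means "take i + 1 stones".
    prefs = {n: [0.0] * min(3, n) for n in range(1, start + 1)}
    for _ in range(games):
        stones, player, history = start, 0, []
        while stones > 0:
            probs = softmax(prefs[stones])
            action = rng.choices(range(len(probs)), weights=probs)[0]
            history.append((player, stones, action))
            stones -= action + 1
            player = 1 - player
        winner = 1 - player  # whoever just took the last stone
        # Reward every move of the winner, penalize every move of the loser.
        for who, pile, action in history:
            reward = 1.0 if who == winner else -1.0
            prefs[pile] = reinforce_update(prefs[pile], action, reward)
    return prefs

trained = self_play_training()
print([round(p, 2) for p in softmax(trained[5])])  # move probabilities from 5 stones
```

Both sides share the same preference table, so every game teaches the policy twice: once from the winner's moves and once from the loser's.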

The more games it played, the stronger its understanding of Go became. By the time AlphaGo faced Lee Sedol, it had already played more games than any human could in a lifetime!

3. The Historic Match: AlphaGo vs Lee Sedol

The 5-game match between AlphaGo and Lee Sedol took place in March 2016. Here’s what happened:

- Game 1: AlphaGo won. The AI shocked everyone!

- Game 2: AlphaGo won again. People started wondering—can AI really be unbeatable?

- Game 3: AlphaGo won, sealing the series victory (3-0).

- Game 4: Lee Sedol made a legendary move (Move 78) and won. Even AI can make mistakes!

- Game 5: AlphaGo won the final game (4-1).

What fascinated me was Move 37 in Game 2. AlphaGo played an unexpected move that no human would consider—but it turned out to be brilliant. Professional players called it a move "from another dimension."

This showed that AI doesn’t just copy humans. It can innovate and find moves that humans might never think of.

4. Why AlphaGo is a Big Deal

- Combining Supervised and Reinforcement Learning

  - AlphaGo started by learning from humans (supervised learning).

  - Then it surpassed human knowledge through self-play (reinforcement learning).

- Monte Carlo Tree Search + Neural Networks

  - By combining MCTS with deep learning, AlphaGo showed how AI can efficiently search through massive decision spaces.

- A New Era for AI

  - AlphaGo’s success proved that AI can tackle complex problems and learn strategies on its own.

  - This approach inspired later advancements like AlphaZero, which learned to master chess, Go, and shogi from scratch without human games.
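The "Monte Carlo Tree Search + Neural Networks" point can be made concrete with the kind of selection score used inside the search. Each candidate move gets its average result so far (Q) plus a bonus that grows with the policy network's prior and shrinks as the move gets visited. The sketch below follows the general PUCT shape described for the AlphaGo family. The constant `c_puct=1.5` and all the example numbers are made up for illustration, and the function names are mine.

```python
import math

def puct_score(q, prior, parent_visits, child_visits, c_puct=1.5):
    """Average value so far plus a prior-weighted exploration bonus."""
    return q + c_puct * prior * math.sqrt(parent_visits) / (1 + child_visits)

def select_move(stats, c_puct=1.5):
    """stats maps move -> {'q': ..., 'prior': ..., 'visits': ...}."""
    parent_visits = sum(s["visits"] for s in stats.values())
    return max(
        stats,
        key=lambda m: puct_score(
            stats[m]["q"], stats[m]["prior"], parent_visits, stats[m]["visits"], c_puct
        ),
    )

# Early in the search: A has been tried a lot, B and C not at all.
early = {
    "A": {"q": 0.5, "prior": 0.6, "visits": 10},
    "B": {"q": 0.0, "prior": 0.3, "visits": 0},
    "C": {"q": 0.0, "prior": 0.1, "visits": 0},
}
print(select_move(early))  # B: unvisited, with a decent prior
```

The prior keeps the search from wasting time on moves the policy network considers hopeless, and the visit count in the denominator makes sure no single move hogs all the attention.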

5. What I Learned

AlphaGo is a perfect example of how combining different AI techniques (deep learning + reinforcement learning + tree search) can create something super powerful. It taught me that AI isn’t just about copying humans—it’s about pushing beyond human limits.

The math, the strategy, and the engineering behind AlphaGo are incredible. I’ve started reading about reinforcement learning algorithms like Q-Learning and Deep Q-Networks (DQN) because I think this is the future of AI.

6. Final Thoughts

Watching AlphaGo play was like seeing AI think for itself. It opened my eyes to what’s possible with machine learning.

One day, I hope to build my own AI program that can learn like AlphaGo. Maybe it won’t play Go, but it might solve problems in physics, medicine, or robotics. The possibilities are endless!